Confluent Platform

Synopsis

This section shows how to launch the Webservice Data Source Sink Connector on a Confluent Platform running locally in a Docker environment.

Preliminary Setup

- Make sure to have Docker Engine and Docker Compose installed on the machine where you want to run the Confluent Platform.

- Docker Desktop, available for Mac and Windows, includes both.

- Download and launch a ready-to-go Confluent Platform Docker image as described in Confluent Platform Quickstart Guide.

- Ensure that the machine on which the Confluent Platform is running has a connection to the SAP source.

- Ensure that you have a licensed version of the SAP Java Connector 3.1 SDK available.

Connector Installation

The connector can be installed either manually or through the Confluent Hub Client.

In both scenarios, it is beneficial to use a volume to transfer the connector file into the Kafka Connect service container. If you run Docker on a Windows machine, add a new system variable COMPOSE_CONVERT_WINDOWS_PATHS and set it to 1.

Manual Installation

- Unzip the zipped connector init-kafka-connect-webds-x.x.x.zip.

- Get a copy of SAP Java Connector v3.1.11 SDK and move it to the lib/ folder inside the unzipped connector folder. SAP JCo consists of sapjco3.jar and the native libraries, such as sapjco3.dll for Windows OS or sapjco3.so for Unix. Include e.g. the native lib sapjco3.so next to the sapjco3.jar.

- Move the unzipped connector folder into the configured CONNECT_PLUGIN_PATH of the Kafka Connect service.

- Within the directory where the docker-compose.yml of the Confluent Platform is located, you can start the Confluent Platform using Docker Compose.

    docker-compose up -d
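Before moving the folder into the plugin path, it can help to confirm that the SAP JCo files actually ended up in lib/. The following is a minimal sketch of such a check, not part of the connector: the function name missing_jco_files is hypothetical, and matching the native library by file-name suffix (so that a platform-prefixed name such as libsapjco3.so is also accepted) is an assumption.

```python
from pathlib import Path

# File names taken from the installation steps above. The native library is
# matched by suffix so that a prefixed name like "libsapjco3.so" also counts
# (assumption, not stated in the text).
JCO_JAR = "sapjco3.jar"
NATIVE_SUFFIXES = ("sapjco3.so", "sapjco3.dll")


def missing_jco_files(connector_dir):
    """Return a list of problems; an empty list means the layout looks complete."""
    lib_dir = Path(connector_dir) / "lib"
    if not lib_dir.is_dir():
        return [f"missing folder: {lib_dir}"]

    names = [p.name for p in lib_dir.iterdir() if p.is_file()]
    problems = []
    if JCO_JAR not in names:
        problems.append(f"missing {JCO_JAR} in {lib_dir}")
    if not any(name.endswith(NATIVE_SUFFIXES) for name in names):
        problems.append(f"missing native JCo library in {lib_dir}")
    return problems
```

Calling missing_jco_files with the path of the unzipped connector folder returns an empty list when both the jar and a native library are present, and a short description of each gap otherwise.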

Confluent Hub Client

Install the zipped connector init-kafka-connect-webds-x.x.x.zip using the Confluent Hub Client from outside the Kafka Connect docker container.

docker-compose exec connect confluent-hub install {PATH_TO_ZIPPED_CONNECTOR}/init-kafka-connect-webds-x.xx-x.x.x.zip

Further information on the Confluent CLI can be found in the Confluent CLI Command Reference.

Connector Configuration

The connector can be configured and launched using the control-center service of the Confluent Platform.

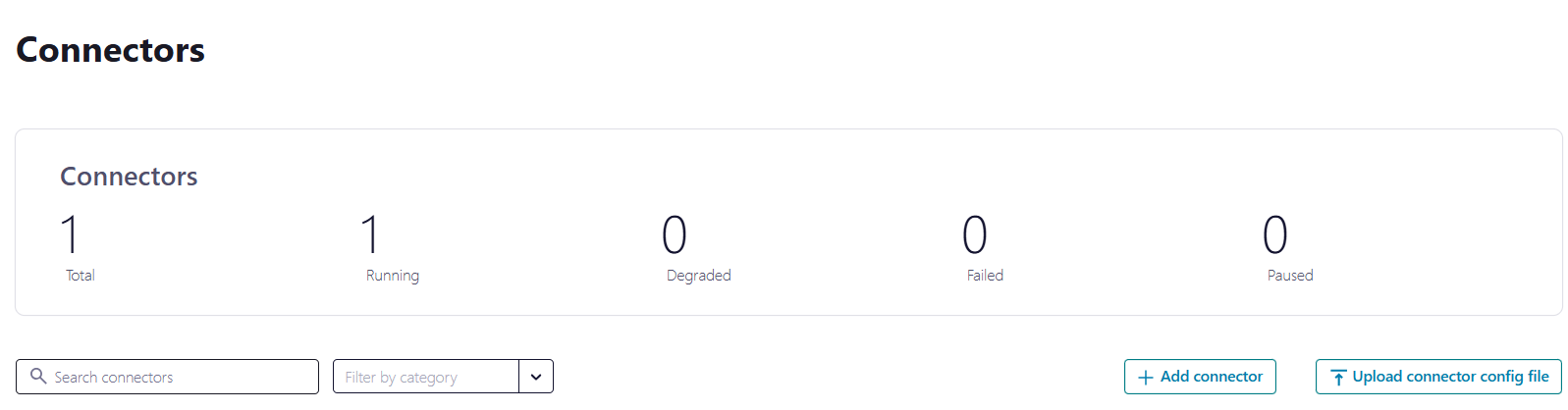

- In the control-center (default: localhost:9021) select a Connect Cluster in the Connect tab.

  Figure: Choose a Connect Cluster.

- Click the button “Add connector” and select WebDSSinkConnector.

  Figure: Add a connector.

- Enter a name and further required configuration for the connector.

To import data to the SAP® source using the Webservice Data Source Sink Connector, you need to follow these steps:

- Transfer the minimal properties to the Confluent Control Center user interface. Remember to include your license key.

    name = webds-sink-connector
    connector.class = org.init.ohja.kafka.connect.webds.sink.WebDSSinkConnector
    tasks.max = 1
    sap.webds.license.key = "Your license key here"
    topics = ZWEBDSTEST
    sap.webds#00.system = TEST
    sap.webds#00.name = ZWEBDSTEST
    sap.webds#00.topic = ZWEBDSTEST
    jco.client.ashost = 127.0.0.1
    jco.client.sysnr = 20
    jco.client.client = 100
    jco.client.user = user
    jco.client.passwd = password

- Launch the sink connector.

- To test the sink connector, you need sample data.
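The key = value properties listed above carry the same information as the JSON document that the Kafka Connect REST API accepts, where the connector name sits at the top level and all other settings sit under "config". The following is a minimal sketch of that conversion. The function name properties_to_payload is hypothetical, the sample input is shortened, and treating surrounding double quotes as decoration rather than part of the value is an assumption.

```python
import json


def properties_to_payload(text):
    """Turn 'key = value' lines into the {"name": ..., "config": {...}} shape."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key = value line: {line!r}")
        # Assumption: surrounding double quotes are not part of the value.
        props[key.strip()] = value.strip().strip('"')
    # The connector name moves to the top level; everything else is config.
    name = props.pop("name")
    return {"name": name, "config": props}


# Shortened sample using property names from the list above.
example = """
name = webds-sink-connector
connector.class = org.init.ohja.kafka.connect.webds.sink.WebDSSinkConnector
tasks.max = 1
topics = ZWEBDSTEST
sap.webds#00.system = TEST
"""

if __name__ == "__main__":
    print(json.dumps(properties_to_payload(example), indent=2))
```

All values stay strings, which matches how connector configurations are usually written in JSON form.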

Starting a Connector via REST Call

- Save the example configuration JSON file into a local directory, e.g. named as sink.webds.json. Remember to include your license key.

    {
      "name": "webds-sink-connector",
      "config": {
        "connector.class": "org.init.ohja.kafka.connect.webds.sink.WebDSSinkConnector",
        "tasks.max": "1",
        "sap.webds.license.key": "Your license key here",
        "topics": "ZWEBDSTEST",
        "sap.webds#00.system": "TEST",
        "sap.webds#00.name": "ZWEBDSTEST",
        "sap.webds#00.topic": "ZWEBDSTEST",
        "jco.client.ashost": "127.0.0.1",
        "jco.client.sysnr": "20",
        "jco.client.client": "100",
        "jco.client.user": "user",
        "jco.client.passwd": "password"
      }
    }

- Once the configuration JSON is prepared, you can start the connector by sending it via a REST call to the Kafka Connect REST API. Use the following command to send a POST request:

    curl -X POST http://localhost:8083/connectors \
      -H "Content-Type:application/json" \
      -H "Accept:application/json" \
      -d @sink.webds.json

- Once the connector is started successfully, the Kafka Connect REST API will return a response in JSON format with details about the connector, including its status and any potential errors. You can verify that the connector is running by checking its status:

    curl -X GET http://localhost:8083/connectors/webds-sink-connector/status

  This will return a JSON object indicating whether the connector is running, its tasks, and any associated errors.
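The same two REST calls can be scripted. The following is a minimal sketch using only the Python standard library. The helper names build_create_request and summarize_status are hypothetical. The sketch assumes that the status response carries a "connector" object and a "tasks" list, each with a "state" field, which is the usual Kafka Connect status layout. The request is only built here, not sent, so the code can be tried without a running Connect worker.

```python
import json
import urllib.request

# Kafka Connect REST endpoint as used in the curl commands above.
CONNECT_URL = "http://localhost:8083"


def build_create_request(payload, base_url=CONNECT_URL):
    """Build (but do not send) the POST request that registers a connector."""
    return urllib.request.Request(
        url=f"{base_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def summarize_status(status):
    """Return (healthy, problems) for a parsed connector status response."""
    problems = []
    connector_state = status.get("connector", {}).get("state")
    if connector_state != "RUNNING":
        problems.append(f"connector is {connector_state}")
    for task in status.get("tasks", []):
        if task.get("state") != "RUNNING":
            problems.append(f"task {task.get('id')} is {task.get('state')}")
    return (not problems, problems)
```

To actually send the request, pass the object returned by build_create_request to urllib.request.urlopen, and feed the parsed JSON body of the status call to summarize_status.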

- Entering user credentials: To prevent the SAP® user from being locked out during the connector configuration process, it is recommended to enter the jco.client.user and jco.client.passwd properties in the JCo Destination configuration block with caution, since the control-center validates the configuration with every user input, which includes trying to establish a connection to the SAP® system.

- Get recommended values automatically: If you enter the properties in the JCo Destination configuration block first, value recommendations for some other properties will be loaded directly from the SAP source system.

- Display advanced JCo configuration properties: To display the advanced JCo configuration properties in the Confluent Control Center UI, you can set the configuration property Display advanced properties to 1.

- Configure multiple Webservice Data Sources: Since you can push data to multiple webservice data sources in one connector instance, an additional Webservice Data Source configuration block will appear once you have provided the required information for the first Webservice Data Source configuration block.

- Selection limits in Confluent Control Center UI: The number of configurable webservice data sources in the Confluent Control Center UI for one connector instance is restricted.
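When a connector instance with several webservice data sources is configured outside the UI, the numbered sap.webds#NN.* properties have to be written by hand. The following is a minimal sketch that generates them. The function name webds_block_properties and the second data source ZWEBDSDEMO in the usage note are hypothetical. The text only shows the index 00, so continuing with 01, 02 and so on is an assumption that should be checked against the connector's configuration reference.

```python
def webds_block_properties(sources):
    """Build numbered sap.webds#NN.* properties plus the matching topics list.

    Each source is a dict with the keys "system", "name" and "topic".
    Assumption: blocks are numbered with two digits starting at 00, following
    the sap.webds#00.* example; higher indices are not shown in the text.
    """
    props = {}
    for index, source in enumerate(sources):
        prefix = f"sap.webds#{index:02d}"
        for field in ("system", "name", "topic"):
            props[f"{prefix}.{field}"] = source[field]

    # Kafka Connect's "topics" setting takes a comma-separated list;
    # duplicates are dropped while keeping the original order.
    topics = []
    for source in sources:
        if source["topic"] not in topics:
            topics.append(source["topic"])
    props["topics"] = ",".join(topics)
    return props
```

For example, passing two sources named ZWEBDSTEST and ZWEBDSDEMO yields sap.webds#00.* and sap.webds#01.* entries and a topics value that lists both topics. The resulting dictionary can be merged into the "config" object of the connector JSON.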