Confluent Platform

Synopsis

This section shows how to launch the OData V2 Connectors on a Confluent Platform running locally in a Docker environment.

Preliminary Setup

- Make sure Docker Engine and Docker Compose are installed on the machine where you want to run the Confluent Platform. Docker Desktop, available for Mac and Windows, includes both.

- Download and launch a ready-to-go Confluent Platform Docker image as described in the Confluent Platform Quickstart Guide.

- Ensure that the machine running the Confluent Platform has network access to the publicly available Northwind V2 (read-only) and OData V2 (read/write) services.

Connector Installation

The OData V2 Connectors can be installed either manually or with the Confluent CLI.

In both cases, it is helpful to use a volume to transfer the connector file into the Kafka Connect service container. If you're running Docker on a Windows machine, make sure to add a new system environment variable COMPOSE_CONVERT_WINDOWS_PATHS and set it to 1.

Manual Installation

- Unzip the zipped connector package init-kafka-connect-odatav2-x.x.x.zip.
- Move the unzipped connector folder into the configured CONNECT_PLUGIN_PATH of the Kafka Connect service.
- Navigate to the directory containing the docker-compose.yml file of the Confluent Platform and use Docker Compose to start the platform:

      docker-compose up -d
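You can also script the first two manual steps. The following Python sketch extracts the zipped connector package into a sub-folder of a plugin directory. It assumes that this directory is a host folder mounted as a volume at the CONNECT_PLUGIN_PATH of the Kafka Connect container. The function name install_connector and the folder layout are illustrative assumptions, not part of the connector distribution.

```python
import zipfile
from pathlib import Path


def install_connector(package: Path, plugin_dir: Path) -> Path:
    """Extract the zipped connector package into a plugin directory.

    Assumption: plugin_dir is a host directory mounted (as a volume) at the
    CONNECT_PLUGIN_PATH of the Kafka Connect container. The package is
    extracted into its own sub-folder named after the archive (without
    ".zip") so that its jars stay together as one plugin.
    """
    if not zipfile.is_zipfile(package):
        raise ValueError(f"{package} is not a zip archive")
    target = plugin_dir / package.stem
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(package) as archive:
        archive.extractall(target)
    return target
```

A call might look like install_connector(Path("init-kafka-connect-odatav2-x.x.x.zip"), Path("connect-plugins")), with x.x.x replaced by the actual version and connect-plugins standing in for your mounted directory. Kafka Connect usually only picks up new plugins when the worker starts, so restart the service afterwards if it is already running.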

Confluent CLI

Install the zipped connector package init-kafka-connect-odatav2-x.x.x.zip with the Confluent CLI from outside the Kafka Connect Docker container:

confluent connect plugin install {PATH_TO_ZIPPED_CONNECTOR}/init-kafka-connect-odatav2-x.x.x.zip

Further information on the Confluent CLI can be found in the Confluent CLI Command Reference.

Connector Configuration

The OData V2 Connectors can be configured and launched using Confluent Control Center (the control-center service of the Confluent Platform).

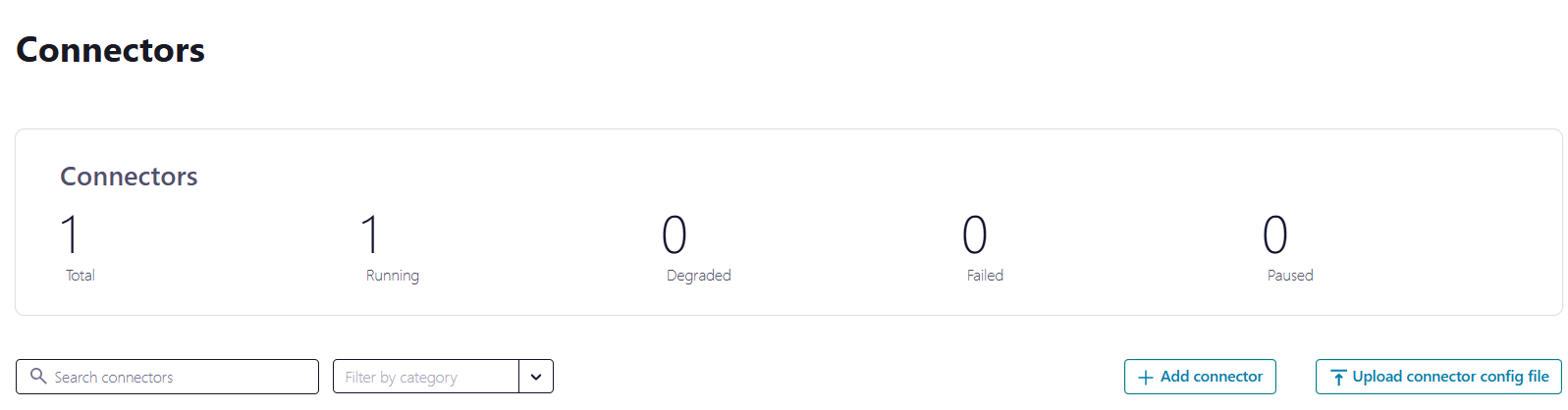

- In the control-center (default: localhost:9091) select a Connect Cluster in the Connect tab.

Choose a Connect Cluster. - Click the “Add connector” button and choose either

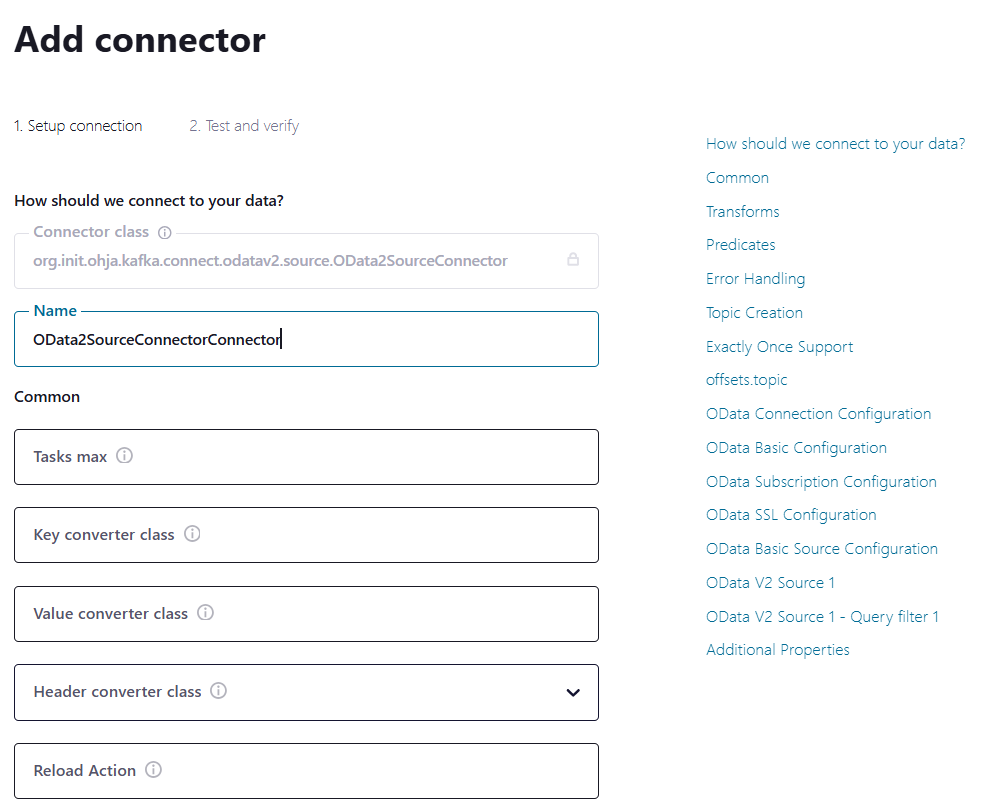

OData2SourceConnectororOData2SinkConnector.

Add a connector. - Provide a name for the connector and complete any additional required configuration.

OData V2 Source Connector

To extract data from the Northwind V2 service with the OData V2 Source Connector, follow these steps:

- Enter the following minimal configuration properties in the Control Center user interface. Remember to include your license key.

      name = odata-source-connector
      connector.class = org.init.ohja.kafka.connect.odatav2.source.OData2SourceConnector
      tasks.max = 1
      sap.odata.license.key = "Your license key here"
      sap.odata.host.address = services.odata.org
      sap.odata.host.port = 443
      sap.odata.host.protocol = https
      sap.odata#00.service = /V2/Northwind/Northwind.svc
      sap.odata#00.entityset = Order_Details
      sap.odata#00.topic = Order_Details

- Launch the source connector.
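You can reuse the same minimal configuration for the REST approach described below. The following Python sketch parses the simple key = value lines shown above. It then builds the payload shape used by the Kafka Connect REST API, with the connector name at the top level and all other settings, as strings, under config. The helper names are hypothetical. The parser only handles this plain key = value form, not the full Java properties syntax (escapes, line continuations). Stripping the double quotes around the license key is an assumption, made so that the result matches the JSON example.

```python
import json


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple "key = value" lines (no escapes or continuations)."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Blank lines and lines starting with # or ! are comments;
        # a "#" inside a key such as sap.odata#00.service is kept.
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key = value line: {raw!r}")
        value = value.strip()
        # Assumption: surrounding double quotes are not part of the value.
        if len(value) >= 2 and value[0] == value[-1] == '"':
            value = value[1:-1]
        props[key.strip()] = value
    return props


def to_rest_payload(props: dict[str, str]) -> dict:
    """Move "name" to the top level and keep the remaining keys under "config"."""
    config = dict(props)
    name = config.pop("name")
    return {"name": name, "config": config}


SOURCE_PROPERTIES = """
name = odata-source-connector
connector.class = org.init.ohja.kafka.connect.odatav2.source.OData2SourceConnector
tasks.max = 1
sap.odata.license.key = "Your license key here"
sap.odata.host.address = services.odata.org
sap.odata.host.port = 443
sap.odata.host.protocol = https
sap.odata#00.service = /V2/Northwind/Northwind.svc
sap.odata#00.entityset = Order_Details
sap.odata#00.topic = Order_Details
"""

if __name__ == "__main__":
    payload = to_rest_payload(parse_properties(SOURCE_PROPERTIES))
    print(json.dumps(payload, indent=2))
```

The printed payload has the same name and settings as source.odatav2.json in the REST section.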

OData V2 Sink Connector

To export data from Kafka to the OData V2 service with the OData V2 Sink Connector, follow these steps:

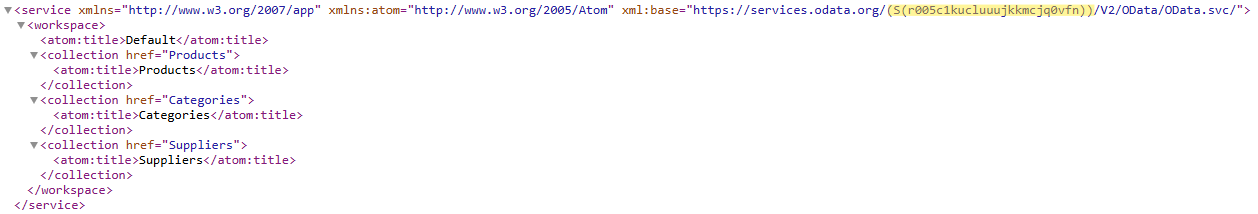

- Open OData V2 service in a browser to obtain a temporary service instance URL that allows write operations. The URL will include a

temporary service IDthat replaces the S(readwrite) part of the URL.

Copy the temporary service ID. - Transfer the properties to the Confluent Control Center user interface. Remember to include the service ID in the service path as well as including your license key.

Insert the properties. name = odata-sink-connector connector.class = org.init.ohja.kafka.connect.odatav2.sink.OData2SinkConnector topics = Suppliers tasks.max = 1 sap.odata.license.key = "Your license key here" sap.odata.host.address = services.odata.org sap.odata.host.port = 443 sap.odata.host.protocol = https sap.odata#00.service = /{ENTER SERVICE ID HERE}/V2/OData/OData.svc/ sap.odata#00.entityset = Suppliers sap.odata#00.topic = Suppliers - Launch the sink connector.

- To test the sink connector, you need sample data. You can obtain sample data for all entities from the target OData service instance. You can then import this data into Kafka using the OData V2 Source Connector.
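Copying the temporary service ID by hand is error-prone. As an alternative, the following Python sketch derives the host and service path properties directly from the URL that the browser shows after the redirect. This keeps the service ID in exactly the position where the service itself puts it. The helper name sink_service_settings is hypothetical. The check for a (S(...)) segment assumes that the temporary ID appears in that form, as the S(readwrite) part of the original URL suggests. Compare the result with your browser's address bar before using it.

```python
import re
from urllib.parse import urlparse

# A service ID segment such as (S(readwrite)) or (S(<temporary id>)).
_SESSION = re.compile(r"\(S\(([^)]+)\)\)")


def sink_service_settings(browser_url: str) -> dict[str, str]:
    """Derive sink connection properties from the redirected service URL.

    browser_url is the URL shown in the browser after opening the read/write
    OData V2 service, i.e. after S(readwrite) was replaced by a temporary ID.
    """
    parsed = urlparse(browser_url)
    match = _SESSION.search(parsed.path)
    if match is None:
        raise ValueError("URL contains no (S(...)) service ID segment")
    if match.group(1) == "readwrite":
        raise ValueError("URL still contains S(readwrite); open it in a browser first")
    # Fall back to the scheme's default port when the URL has none.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    return {
        "sap.odata.host.address": parsed.hostname,
        "sap.odata.host.port": str(port),
        "sap.odata.host.protocol": parsed.scheme,
        # Keep the path exactly as the browser shows it, service ID included.
        "sap.odata#00.service": parsed.path,
    }
```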

Starting a Connector via REST Call

- Save the example configuration JSON into a local file, e.g. named source.odatav2.json. Remember to include your license key.

      {
        "name": "odata-source-connector",
        "config": {
          "connector.class": "org.init.ohja.kafka.connect.odatav2.source.OData2SourceConnector",
          "tasks.max": "1",
          "sap.odata.license.key": "Your license key here",
          "sap.odata.host.address": "services.odata.org",
          "sap.odata.host.port": "443",
          "sap.odata.host.protocol": "https",
          "sap.odata#00.service": "/V2/Northwind/Northwind.svc",
          "sap.odata#00.entityset": "Order_Details",
          "sap.odata#00.topic": "Order_Details"
        }
      }

- Once the configuration JSON is prepared, start the connector by sending it to the Kafka Connect REST API with a POST request:

      curl -X POST http://localhost:8083/connectors \
        -H "Content-Type:application/json" \
        -H "Accept:application/json" \
        -d @source.odatav2.json

- If the connector is created successfully, the Kafka Connect REST API returns a JSON response describing the connector, including its name, configuration and tasks. Otherwise, the response contains an error message. To verify that the connector is running, check its status:

      curl -X GET http://localhost:8083/connectors/odata-source-connector/status

  This returns a JSON object with the state of the connector and each of its tasks, including error traces for failed tasks.
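You can also issue the two curl calls from a script. The following sketch uses only the Python standard library and assumes the Connect REST API at http://localhost:8083, as in the curl examples. The helper names create_connector_request, get_status and is_running are hypothetical. The health check relies on the status response containing a connector object and a tasks list, each with a state field. It treats the connector as running only if the connector and every task report RUNNING.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083"  # Kafka Connect REST API, as in the curl calls


def create_connector_request(payload: dict, base_url: str = CONNECT_URL) -> urllib.request.Request:
    """Build the POST /connectors request that the first curl call sends."""
    return urllib.request.Request(
        f"{base_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def get_status(name: str, base_url: str = CONNECT_URL) -> dict:
    """GET /connectors/<name>/status, like the second curl call."""
    with urllib.request.urlopen(f"{base_url}/connectors/{name}/status") as resp:
        return json.load(resp)


def is_running(status: dict) -> bool:
    """True if the connector and every one of its tasks report state RUNNING."""
    if status.get("connector", {}).get("state") != "RUNNING":
        return False
    tasks = status.get("tasks", [])
    # An empty task list (e.g. right after creation) is not treated as running.
    return bool(tasks) and all(t.get("state") == "RUNNING" for t in tasks)
```

To use it, load source.odatav2.json with json.load and send it with urllib.request.urlopen(create_connector_request(payload)). Then call is_running(get_status("odata-source-connector")). Right after creation, the task list can still be empty, so you may need to poll the status a few times.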

- Configure multiple OData Sources: When you use a single connector instance to poll data from different service entity sets, an extra configuration block for an OData Source appears after you have provided the necessary information for the previous OData Source block.
- Selection limits in the Confluent Control Center UI: The number of OData Sources that can be configured in the Confluent Control Center UI for one connector instance is limited. To configure additional sources beyond this limit, use the Additional Properties section of the UI. No input recommendations are shown there.
- The same rule applies to the number of query filter conditions and OData Sinks.
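Sources beyond the UI limit, or sources defined in a REST configuration, repeat the per-source properties with another index. The following Python sketch generates such blocks. It assumes that further blocks are numbered #01, #02 and so on after the #00 prefix used in the examples above. It also assumes that each entity set goes to a topic of the same name. Both are assumptions to check against the connector's configuration reference. The function name source_blocks is hypothetical, and the entity sets in the usage are only illustrative.

```python
def source_blocks(service: str, entitysets: list[str]) -> dict[str, str]:
    """Return sap.odata#NN.* properties, one block per entity set.

    Assumption: blocks use a two-digit index starting at 00, and each entity
    set is written to a topic of the same name, as in the single-source example.
    """
    props: dict[str, str] = {}
    for index, entityset in enumerate(entitysets):
        prefix = f"sap.odata#{index:02d}"
        props[f"{prefix}.service"] = service
        props[f"{prefix}.entityset"] = entityset
        props[f"{prefix}.topic"] = entityset
    return props


if __name__ == "__main__":
    blocks = source_blocks("/V2/Northwind/Northwind.svc", ["Order_Details", "Orders", "Customers"])
    for key, value in blocks.items():
        print(f"{key} = {value}")
```

The printed lines use the same key = value form as the properties above, so you can paste them into the Additional Properties section or merge them into the config object of a REST payload.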

The public OData V2 service has restrictions on write operations, which include:

- The service only supports the content type application/atom+xml.
- The service limits the number of entities per entity set that can be written at once to 50.

- String properties have a maximum length of 256 characters for write operations.
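Before you feed sample data to the sink connector, you can check it against the two numeric limits above. The following Python sketch is a hypothetical pre-check on plain dictionaries, one per entity of a single entity set. It is not a feature of the connector. Treating "written at once" as the size of one sample set is an assumption. The content type restriction is not covered by this check.

```python
MAX_ENTITIES = 50        # entities per entity set written at once
MAX_STRING_LENGTH = 256  # characters per string property for write operations


def check_sample(entities: list[dict]) -> list[str]:
    """Return human-readable problems; an empty list means no limit is exceeded."""
    problems = []
    if len(entities) > MAX_ENTITIES:
        problems.append(f"{len(entities)} entities, limit is {MAX_ENTITIES}")
    for position, entity in enumerate(entities):
        for name, value in entity.items():
            # Only string properties are subject to the length limit.
            if isinstance(value, str) and len(value) > MAX_STRING_LENGTH:
                problems.append(
                    f"entity {position}: {name} has {len(value)} characters, "
                    f"limit is {MAX_STRING_LENGTH}"
                )
    return problems
```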